Google Gemini vs. ChatGPT: Seven Tests to Decide Which AI Is No. 1

Which is better, Google's Gemini large model or OpenAI's GPT?

How much progress has Gemini made compared to Google's previous models? Gemini Ultra, which Google claims surpasses GPT-4, is set to launch next year. In the meantime, Google's Bard chatbot has already been updated with the lower-tier Gemini Pro (roughly equivalent to GPT-3.5).

The tech review website Ars Technica ran a comprehensive head-to-head test of Gemini Pro, GPT-3.5, and GPT-4 in areas such as mathematics, programming, and text generation. It also included results from April for the older version of Bard (running the PaLM 2 model), both to show how far Google Bard has come and to see which of these AI assistants does the most to improve work efficiency for the average person.

The results showed that, despite the addition of Gemini Pro, Google's Bard chatbot still couldn't outperform ChatGPT in most areas. However, compared to the older version of Bard from eight months ago, there has been a significant qualitative leap.

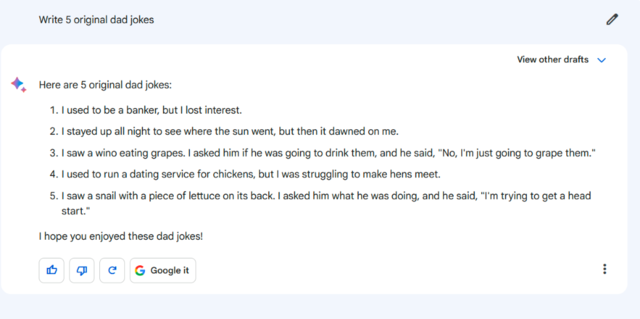

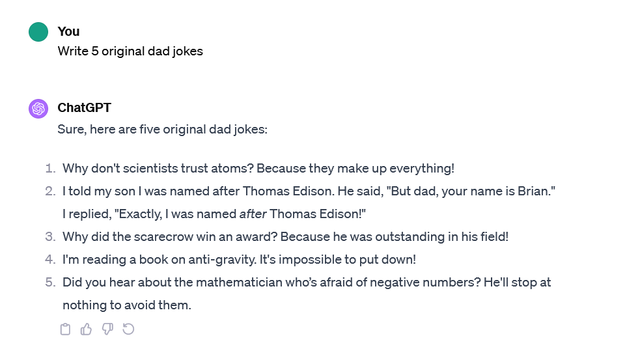

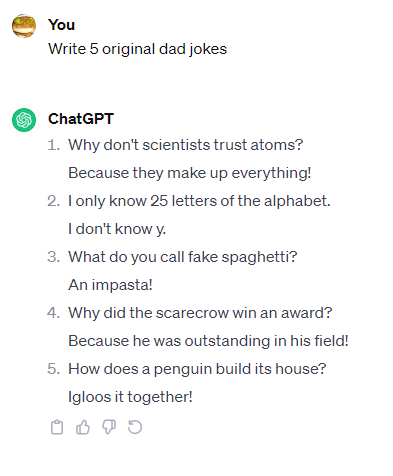

First Question: Humor

Prompt: Write 5 original puns.

(From top to bottom, the responses are from Gemini Pro, the older version of Bard, GPT-4, and GPT-3.5.)

Second Question: Debate

Prompt: Write a five-line debate speech between advocates of PowerPC processors and supporters of Intel processors, set around the year 2000.

Compared to the older version of Bard, Gemini Pro shows significant progress, incorporating many industry-specific terms such as AltiVec instructions, RISC vs. CISC design, and MMX technology. These terms would not seem out of place in many tech forum discussions of that era.

Moreover, although Gemini Pro keeps to the requested five lines, its debate reads as if it could continue beyond them, whereas the older version of Bard wraps up neatly at the fifth line.

In comparison, the responses generated by the GPT series use less technical jargon and focus more on 'power and compatibility', which makes their arguments easier for non-technical readers to follow. However, GPT-3.5's responses are quite lengthy, whereas GPT-4's arguments are more concise and to the point.

Debate Outcome: GPT Wins

Third Question: Mathematics

Prompt: If Microsoft Windows 11 were to be installed using 3.5-inch floppy disks, how many floppy disks would be required in total?

The older version of Bard provided the answer '15.11 disks', which is completely incorrect. Gemini, on the other hand, accurately estimated the installation size of Windows 11 (20 to 30GB) and correctly calculated the need for 14,223 1.44MB floppy disks based on an estimate of 20GB. Gemini also conducted a 'double-check' through Google search, enhancing users' confidence in the answer.

In comparison, ChatGPT fell short. GPT-3.5 incorrectly estimated the size of Windows 11 as 10GB, while GPT-4 used an equally incorrect 64GB (which appears to be the minimum storage requirement, not the space the operating system actually occupies once installed).
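The arithmetic behind the floppy disk count can be checked with a short sketch. It assumes 1GB = 1,024MB and treats each disk as holding exactly 1.44MB, the convention that reproduces the 14,223 figure; the function name `floppies_needed` is illustrative and does not come from the original test.

```python
import math

FLOPPY_CAPACITY_MB = 1.44  # nominal capacity of a 3.5-inch high-density disk
MB_PER_GB = 1024           # assumption: binary gigabytes


def floppies_needed(install_size_gb):
    """Return how many whole floppy disks are needed for the given size in GB."""
    size_mb = install_size_gb * MB_PER_GB
    # A partly filled disk still counts as a disk, so round up.
    return math.ceil(size_mb / FLOPPY_CAPACITY_MB)


# Compare the size estimates mentioned in the test.
for label, size_gb in [("Gemini Pro", 20), ("GPT-3.5", 10), ("GPT-4", 64)]:
    print(f"{label}: {size_gb}GB -> {floppies_needed(size_gb):,} disks")
```

Under the same assumptions, the 10GB and 64GB estimates would call for 7,112 and 45,512 disks respectively, which shows how strongly the final answer depends on the size estimate.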

Debate Outcome: Google Wins

Fourth Question: Summary Paragraph

Prompt: Summarize an article about AI regulation.

The summary generated by Gemini Pro is very concise and provides links to citations. However, it seems overly brief, even omitting some key details that the older version of Bard included, such as the video being composed of ten two-second clips. While the rewrite somewhat improves readability, it sacrifices completeness.

ChatGPT's summaries lost some points for not being concise enough: they range from 99 words (GPT-4) to 108 words (GPT-3.5), whereas the summaries from the new and old versions of Google Bard are only 63 to 66 words long.

However, ChatGPT included more important details, such as the media's reaction, the original poster's name, and the subreddit, all of which were overlooked by Gemini.

Debate Outcome: GPT Wins

Fifth Question: Fact Retrieval

Prompt: Who invented video games?

There has been significant improvement in Bard's response to this question. The older version of Bard focused only on Ralph Baer's Brown Box and Magnavox Odyssey (seemingly taking information directly from Wikipedia), while the new version, Gemini Pro, accurately and concisely pointed out William Higinbotham's earlier contribution with 'Tennis for Two.'

Gemini then expanded the discussion from 'invention' to include figures like Nolan Bushnell, Ted Dabney, and Al Alcorn, who 'made significant contributions to the early development of video games', providing basically accurate information about each.

However, Gemini then inaccurately rambled on about the work of Apple's founders, Jobs and Wozniak, without mentioning their early years at the game company Atari.

Like the older version of Bard, GPT-3.5 also centered on Ralph Baer. While it mentioned that 'over the years, various individuals and companies have contributed to the industry', it failed to name these important figures.

GPT-4, on the other hand, began by pointing out that the invention of video games 'cannot be attributed to a single person', then expanded its summary to include Higinbotham, Bushnell, and, crucially, Steve Russell's 1962 creation of 'Spacewar!' on the PDP-1.

Debate Outcome: GPT Wins (but Gemini performed better than GPT-3.5).

Sixth Question: Creative Writing

Prompt: Write two paragraphs about a fictional story of Lincoln inventing basketball.

The older version of Bard wrote exceptionally well, but unfortunately, it significantly exceeded the required length with too many lengthy sentences. In comparison, Gemini Pro wrote more concisely with a greater emphasis on key points. The stories written by GPT also had their unique charm and memorable phrases.

Debate Outcome: Tie.

Seventh Question: Coding Ability

Prompt: Write a Python script that prints 'Hello World' and then creates an endlessly repeating random string.

Although Bard has been able to generate code since June, and Google has boasted about Gemini's AlphaCode 2 system aiding programmers, this test was surprisingly disappointing.

Gemini consistently responded with 'information may be incorrect, unable to generate'. If pressed to generate code, it would simply crash, with a 'reminder that Bard is still experimental.'

Meanwhile, GPT-3.5 and GPT-4 both produced the same code. It was simple and clear, ran perfectly without any editing, and passed the test smoothly.
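For reference, a script that satisfies this prompt can be quite short. The following is a minimal sketch, not the code ChatGPT actually produced. It reads the prompt as asking the script to print 'Hello World' and then emit an unending stream of random characters; the names `random_chars` and `main`, and the optional `limit` parameter, are assumptions added for illustration.

```python
import random
import string
from itertools import islice

ALPHABET = string.ascii_letters + string.digits


def random_chars(alphabet=ALPHABET):
    """Yield random characters from the alphabet forever."""
    while True:
        yield random.choice(alphabet)


def main(limit=None):
    """Print the greeting, then random characters (endlessly if limit is None)."""
    print("Hello World")
    # islice with a stop of None never ends; pass a number to cap the output.
    for ch in islice(random_chars(), limit):
        print(ch, end="", flush=True)
```

Calling `main()` prints characters until interrupted (for example with Ctrl+C), while `main(limit=40)` stops after 40 characters, which is handy for a quick check.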

Debate Outcome: GPT Wins

Ultimately, across the seven tests, GPT achieved an overwhelming victory with 4 wins, 1 loss, and 2 draws. However, the output of Google's large AI models has also clearly improved in quality. In tests involving mathematics, summarizing information, fact retrieval, and creative writing, Bard equipped with Gemini has made a major leap compared to 8 months ago.